This is part 2D of Comparing Microbiomes from Three Different Providers – Part 1. I decided to do each lab separately and then do an overview at the end. See also:

- Ombre Suggestions Analysis – Failing Grade – 2A

- Biomesight Suggestions Analysis – Good Results – 2B

- Thorne Suggestions Analysis – INCOMPLETE / FAILED – 2C

- Comparing standalone suggestions – 2E (a reader wanted to know how similar the Microbiome Prescription suggestions were when using different data)

In this post we are going to combine the consensus reports from the above 3 sample reports and see what is shared by all of the suggestions. The goal is to see whether there is some convergence of suggestions.

Uber Consensus

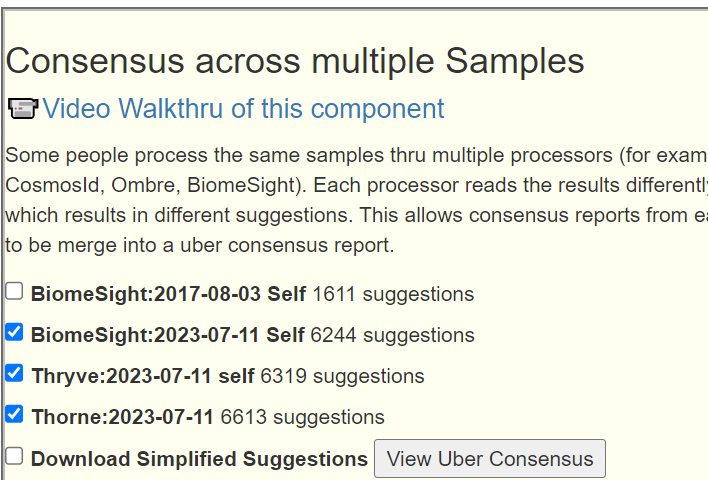

We select the Multiple Samples tab and then check the three consensus reports. Note the number of modifiers in each sample's suggestions (over 6000 items were considered). On the surface, this appears to be at least one, if not two, orders of magnitude more than the suggestions from the labs.

The following are the highest positive or negative entries where there is good agreement.

Commentary

There were no probiotics in the above to take, only those to avoid. It is interesting that the labs whose business model includes selling probiotics actually suggested these probiotics (the ones to be avoided above)! This has a strong aroma of a conflict of interest.

Many of the above items were not suggested by any lab despite a few being typical, e.g. melatonin.

Other Observations

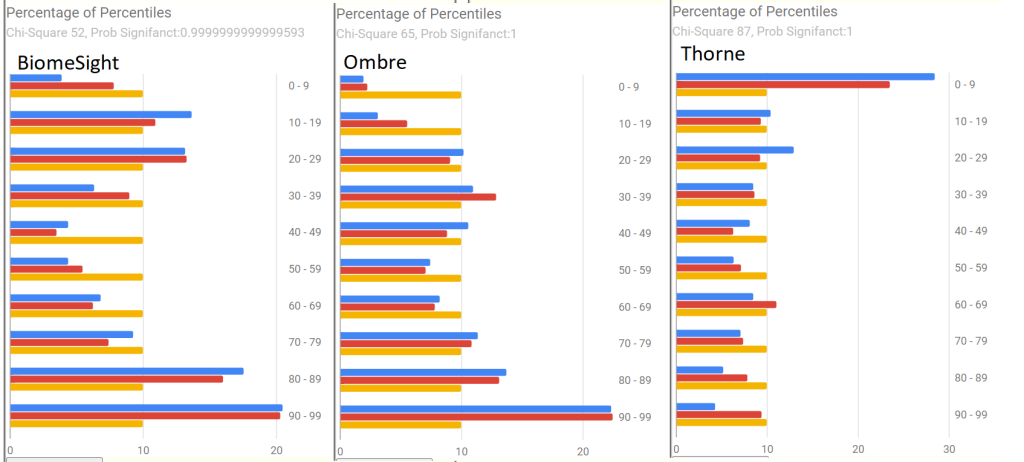

Percentages of Percentiles

For BiomeSight and Ombre, we compute percentiles based on samples uploaded. Thorne provides their own percentiles. We see a major contrast below.

| Measure | BiomeSight | Ombre | Thorne |
| --- | --- | --- | --- |
| Jason Hawrelak | 8 ideal (96%ile) | 6 ideal (75%ile) | 5 ideal (56%ile) |
| Bacteria Reported | 748 | 886 | 3349 |
| Shannon Diversity Index | 1.93 (89%ile) | 3.34 (93%ile) | 2.85 (70%ile) |
| Simpson Diversity Index | 0.2 (8%ile) | 0.2 (5%ile) | 0.3 (9%ile) |
| Chao1 Index | 17785 (89%ile) | 33700 (89%ile) | 341848 (70%ile) |
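
For readers unfamiliar with the measures in this table, here is a minimal sketch of the textbook formulas for the three diversity indices, plus a simple percentile rank against a set of reference samples. This is an illustration only: the function names are mine, and I am assuming natural logarithms, Simpson's dominance form, and the bias-corrected Chao1 variant. The labs and Microbiome Prescription may use different variants, which would give different numbers.

```python
import math


def shannon_index(counts):
    """Shannon index H = -sum(p_i * ln(p_i)) over taxa with nonzero counts."""
    total = sum(counts)
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)


def simpson_dominance(counts):
    """Simpson's dominance D = sum(p_i ** 2); lower values mean a more even sample."""
    total = sum(counts)
    return sum((c / total) ** 2 for c in counts)


def chao1(counts):
    """Bias-corrected Chao1 richness: S_obs + F1 * (F1 - 1) / (2 * (F2 + 1)).

    F1 and F2 are the number of taxa seen exactly once and exactly twice.
    """
    observed = sum(1 for c in counts if c > 0)
    singletons = sum(1 for c in counts if c == 1)
    doubletons = sum(1 for c in counts if c == 2)
    return observed + singletons * (singletons - 1) / (2 * (doubletons + 1))


def percentile_rank(value, reference):
    """Percentage of reference values strictly below `value` (one simple definition)."""
    below = sum(1 for r in reference if r < value)
    return 100.0 * below / len(reference)
```

The inputs are raw read counts per taxon for one sample, so the same sample run through pipelines that report very different numbers of bacteria (as in the table) would be expected to produce different index values.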

Bottom Line

The purpose of this series of posts was to do a non-judgmental evaluation of the three lab reports and suggestions to help people make better choices. All of the steps that I did are easily repeatable by anyone who wishes to replicate this experiment. (P.S. If you do, I am not opposed to doing a repeat set of posts with different data.)

Key findings:

- Only Biomesight provided AVOID lists (too short IMHO); i.e. the other labs are happy for you to keep feeding ‘bad’ bacteria

- Only Biomesight provided links to studies connected to their suggestions

- The reports from the labs are significantly different; however, when each report is used with the Microbiome Prescription algorithms, we get agreement. This is likely due to the nature of the algorithms used.

My impression is that you should use whichever lab is available to you (two sell in the US only, one worldwide), ignore their suggestions, and use the free suggestion engine on Microbiome Prescription.

Microbiome Prescription does provide a detailed evidence trail for every single suggestion it makes. Some of the evidence is less than ideal, but it is at least reasonable (and less-than-ideal data is given reduced weight).

I gave this an Excellent because it matched the criteria that I use:

- Avoid lists are given

- Evidence trail to studies for every suggestion

- A large number of substances are evaluated

- Weights are given for Take lists.

(And I acknowledge there is a conflict of interest here, but no financial gain.)

The following videos illustrate how to view the evidence trail.

I would be interested to see how the three separate consensus suggestions compare (i.e. not doing the uber consensus). Do the top takes & avoids match across the different labs, or are they different? Because if they are different then the algorithm is not robust to changes in lab.

That is a good question. I will do a short blog post later this weekend trying to answer that question.

Gut feeling is about 80+% concurrence… we’ll see.
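
One way to put a number on "concurrence" is sketched below. It is only a sketch under my own assumptions: each lab's consensus is a mapping from substance to weight (positive meaning take, negative meaning avoid), we keep the top N by absolute weight, and two labs agree on an item only when both the item and its direction match. The lab names, item names and weights are made-up placeholders, not real results.

```python
from itertools import combinations


def top_suggestions(weights, n):
    """Return the n entries with the largest absolute weight as (item, direction) pairs."""
    ranked = sorted(weights.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {(name, "take" if w > 0 else "avoid") for name, w in ranked[:n]}


def concurrence(a, b):
    """Share of the smaller list that also appears, with the same direction, in the other."""
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


# Placeholder data for illustration only (hypothetical labs, items and weights).
reports = {
    "lab_1": {"item_a": 5.0, "item_b": -4.0, "item_c": 3.0, "item_d": -1.0},
    "lab_2": {"item_a": 4.5, "item_b": -3.5, "item_c": -2.0, "item_e": 1.0},
    "lab_3": {"item_a": 6.0, "item_b": -5.0, "item_c": 2.5, "item_f": 0.5},
}

tops = {lab: top_suggestions(w, 3) for lab, w in reports.items()}
pairwise = {
    (x, y): concurrence(tops[x], tops[y]) for x, y in combinations(tops, 2)
}

for pair, score in pairwise.items():
    print(pair, f"{score:.0%}")
```

Including the direction in the comparison matters: an item that one lab's data says to take and another's says to avoid (like `item_c` for `lab_2` here) counts as a disagreement rather than a match.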